This is the second in a series of guest posts by Andrew Tate, a writer turned software developer at Animalz. He has been using using PullRequest code review to help improve their first product, Revive, and learn more about development.

- 3 Lessons Learned from My First Week of Code Review

- Learning Security One Code Review at a Time

- Solving Problems Collaboratively Through Code Review

One of the biggest challenges of being a new developer working alone or on a small team is managing uncertainty; you don’t know what you don’t know. But one ‘unknown’ towers over the rest in terms of risk and severity: making sure the code you’re writing is secure.

Anything consumer-facing introduces security risk. Collecting some user data is inevitable in most applications. From user login to billing preferences, different applications need to keep track of varying customer information. Despite the sophistication of your technology stack, attacks such as DDOS, spoofing, or hacks can still take it down.

Add to this the fact that speed is critical for small teams. Taking time to learn about fixes for security issues isn’t the priority.

A great option to minimize security risk without impacting your team’s workflow is to leverage experts through external code review. As Lyal puts it, “An experienced engineer trained to look for security issues can prevent vulnerabilities from being introduced in ways that would be much harder to catch otherwise.”

With expert reviewers looking at your code, you have immediate insight into the security holes, and you have access to people who can help you fix them. Here is how external code review is helping me overcome this problem right now.

Better security makes for a better product

There are three paths a user can take in your app.

They can take the happy path. This is the path you planned for them to take. In a payment app, you would validate the card number and return a success page. But sometimes things go wrong, and users can end up on the sad path. This would be the number being entered incorrectly or not matching the security code. You can catch sad paths with exceptions.

Then there is the evil path. This is the path where a bad actor tries to make the app crash or tries to get access to data they shouldn’t. Better security is mostly concerned with blocking the evil path. But the side effects bleed into the happy and sad paths.

Here is a comment left on my first PR:

I’m assuming lib.parsing is a custom implementation of a spider. The implementation should take care to have timeouts when fetch the URL content, validate the content size of the URL is of reasonable limits, and make attempts to not store any data retrieved from the URL. These can all lead to security issues like DDOS, memory overflow issues, etc.

—Code reviewer Shahrukh S.

lib.parsing is exactly that. My initial function read the html from a url:

import urllib.request

def getHtmlString(url):

hdrs = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 Safari/537.36', 'accept-encoding': 'identity'}

req = urllib.request.Request(url, headers=hdrs)

resp = urllib.request.urlopen(req)

htmlBytes = resp.read()

htmlString = htmlBytes.decode("utf-8")

return htmlString

This does what I want it to: open a url, read it, then decode into an html string. It is a pretty simple web scraper. To write this code, I did what any self-respecting new (and old) developer would do—I copied it from Stack Overflow.

Stack Overflow, code snippets, tutorials, and docs are all vital to developers, especially those not working within a team. Often though, many posts include only the essential tidbits for solving the OP’s specific problem. The post that the code came from was about a web scraper, so that is what I got. It wasn’t about a secure web scraper, so I couldn’t expect much more than that.

I am building this as part of an internal tool that is only used by me, so I didn’t really consider any security risks. But the comment from the reviewer is still pertinent. Even if I know the url:

- It might still time out and lock up the app.

- I don’t know the size of the entire page.

- The returned contents are stored.

Added to that, the function is called recursively as the crawler goes deeper into a website. I might know the initial url but not the urls deep down in a site. This is where trying to block the evil path helps mitigate the sad path. The reviewer is concerned about nefarious actors, but he has also helped me write code that performs better for its userbase—in this case, just me. A reviewer’s goal is to ensure higher quality code, regardless of how small the scope is.

In my next PR, this became:

import urllib.request

from urllib.error import URLError

import logging

from socket import timeout

MAX_TIMEOUT = 10

MAX_CONTENT_LENGTH = 1000000

def getHtmlString(url):

hdrs = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 Safari/537.36'}

req = urllib.request.Request(url, headers=hdrs)

try:

resp = urllib.request.urlopen(req, timeout=MAX_TIMEOUT)

except URLError as e:

if hasattr(e, 'reason'):

logging.error('We failed to reach a server.')

logging.error('Reason: %s', e.reason)

elif hasattr(e, 'code'):

logging.error('The server couldn\'t fulfill the request.')

logging.error('Error code: %s', e.code)

except timeout:

logging.error('socket timed out - URL %s', url)

else:

if int(resp.info()['content-length']) > MAX_CONTENT_LENGTH:

logging.debug('Page too big - skipping')

htmlString = None

else:

print('here')

htmlBytes = resp.read()

htmlString = htmlBytes.decode("utf-8")

return htmlString

The main components are still there. A request object is created with a custom header, which is then supplied to urlopen to open. But now it has “timeouts when fetch[ing] the URL content” and “validate[s] the content size of the URL is of reasonable limits.” It continues to store the retrieved html string in a variable, but two of the initial concerns of the reviewer are dealt with in just two lines.

Look at how these two changes help mitigate a few evil paths. The timeout means that if a url doesn’t return data in a reasonable time, the whole thing won’t come grinding to a halt. The check on content size means that even if the function is accidentally passed gfycat.com, it won’t stall.

Though the review was concerned with security issues, the evil path, their comments have also helped the sad path and the happy path. To eliminate timeouts not just from the call to the url but also from other request errors, I have added a few exceptions with a try/except/else block. This means that a broken URL won’t stop the crawler from running. Additionally, the information from these errors can help diagnose problems with the pages we are crawling—the aim of the app—helping the end user, aka, yours truly!

Did this get an LGTM? Oh no.

Better security makes for a better developer

For security purposes, it is a good idea to verify the URL input as well. The URL length, maximum number of redirects, whitelist or basic matching (not internal, private IP, etc) of the URL host.

—Code reviewer Shahrukh S.

Once I fixed those issues, the reviewer found other security issues within the code. This can seem like a disheartening never-ending story, but it is the story of learning. On each learning iteration, I learn more about security and good coding practice.

Because this is a Django app, I can learn on the in-built methods within Django for some of the validation:

from django import forms

class UrlForm(forms.Form):

url = forms.URLField(max_length=200)

def clean(self):

cleaned_data = super(UrlForm, self).clean()

url = cleaned_data.get('url')

if not url:

raise forms.ValidationError('Please enter a url.')

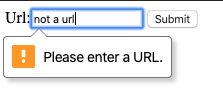

This instantiates a new form with a single field for a URL. I can set the max_length the field takes as an argument and raise a validation error if the input isn’t recognized as a url:

This validation can act as an initial line of defense against attacks. Urllib was susceptible to HTTP header injection and “request smuggling,” where “an attacker could inject a whole extra HTTP request into the stream with URLs like:”

_http://127.0.0.1%0d%0aConnection%3a%20Keep-Alive%0d%0a%0d%0aPOST%20%2fbar%20HTTP%2f1.1%0d%0aHost%3a%20127.0.0.1%0d%0aContent-Length%3a%2031%0d%0a%0d%0a%7b%22new%22%3a%22json%22%2c%22content%22%3a%22here%22%7d%0d%0a:12345/foo_

That url is 224 characters long and fails url validation so it wouldn’t even be accepted to the form, even before security checks within the more recent, patched urllib stopped it.

Regarding the rest of the comments, I can limit the number of redirects by checking status codes and use url_has_allowed_host_and_scheme() from the django.utils.http library to match the url to allowed hosts.

Will that fix all the security holes? Probably not, but then the reviewer of my next PR will help me dive deeper and uncover, and fix until we are both confident the code is secure. In this way, the reviewer is teaching me what is important and how to think about security and other issues, making me a better developer.

Conclusion

Small teams should have quick cadence. That is why security often gets forgotten. Security implications get accidentally overlooked because of a laser-focus on product.

But when within a code review framework, this isn’t true.Code review raises standards. The reviewer can act as a guide, finding critical issues and pointing the developer in the right direction to get to the fix quick.

In fact, used properly, code review can get to the answer much quicker than I did. The mistake I made here and with other PRs is that I didn’t ask for further advice. From another recent review:

Feel free to reply if you need further clarification.

—Code reviewer Oliver E.

I went and found the answers to these myself instead of asking for “further clarification.” If I had done so, these issues could have been fixed much quicker, allowing me to move on to the important logic of my code. In my next article, I will go into the dynamics of working with external reviewers to build a much larger team of teachers and advisers and, ultimately, build a product a lot faster.