About the author

Nikhil Pal Singh is a repeat technical founder from Sydney, Australia with over 20 years of experience building software at companies like Lucent Technologies and Yahoo. Most recently, he founded TekTorch.AI: an innovation lab that helps businesses use state-of-the-art AI and ML to improve operations.

Over the past five years, Nikhil has been managing development teams of 15+ engineers.

Follow Nikhil on Twitter @techies

Every product on the market today competes with similar products for market dominance. Most products have so many competitors that consumers can switch to another option with the click of a button, seeking the best experience for their requirements at the lowest price point.

One of the primary factors affecting customer experience is the speed of the application. It is estimated that when loading time goes up by 1 second, customer satisfaction goes down by 16%. That is a significant negative impact, and a slow load can be precisely the moment a customer decides to move on to an alternative that delivers the expected results faster. Users lost this way are very difficult to win back, and the overall product reputation takes a big hit as a result. It is therefore crucial to stay alert and make sure your users aren’t put in a position where they need to consider other options. The best way to do this is to track the different modules of the application and remove the root causes of slowness.

A stable monitoring system can help identify the causes of slow behavior and point to the sections of the application where errors originate and disrupt the application’s pipeline.

Here are some essential things to keep in mind to optimize for application speed and keep your users happy:

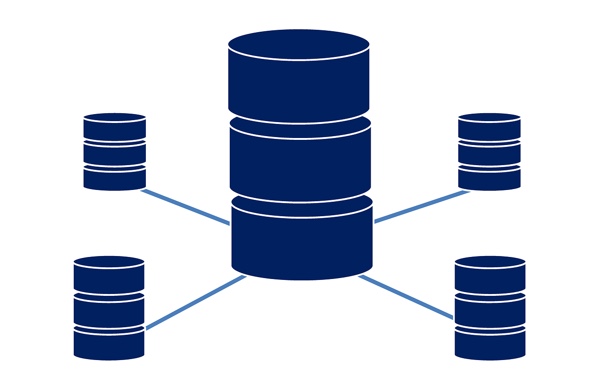

Apply monitoring to your servers

Applications are often slow to process and load information, yet it can be hard to notice that the servers they run on are the cause. Usually, multiple servers must interact with each other to deliver an end-to-end working application. DNS servers, front-end servers, application servers, and several middleware servers are a few examples of those required to run an application optimally. This means there are multiple opportunities for breakage and overall performance degradation. Weak or disrupted interactions between any of these servers can slow the application down considerably.

To avoid this, it is vital to identify the weak links and strengthen them. A popular and effective way of doing this is to deploy a monitoring system and configure it at the points where monitoring is required. It keeps track of the interconnections and makes it easier to track down the root cause of any problem that arises. It is also advisable to use servers that are close to your user group. This makes the application more responsive, since the time between requests and responses is cut down.
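As a rough illustration of finding the weak link, the sketch below times each hop in a request path and reports the slowest one. The hop names and the `time.sleep` stand-ins are assumptions made up for this example; in a real setup each probe would call the actual server, and a dedicated monitoring product would normally do this for you.

```python
import time
from typing import Callable, Dict


def time_hops(hops: Dict[str, Callable[[], object]]) -> Dict[str, float]:
    """Run each hop's probe once and return its elapsed time in milliseconds."""
    timings = {}
    for name, probe in hops.items():
        start = time.perf_counter()
        probe()
        timings[name] = (time.perf_counter() - start) * 1000.0
    return timings


def slowest_hop(timings: Dict[str, float]) -> str:
    """Return the name of the hop that took the longest."""
    return max(timings, key=timings.get)


if __name__ == "__main__":
    # Hypothetical probes: sleeps stand in for calls to real servers.
    hops = {
        "dns": lambda: time.sleep(0.01),
        "front_end": lambda: time.sleep(0.02),
        "application": lambda: time.sleep(0.05),
    }
    timings = time_hops(hops)
    print(timings, "slowest:", slowest_hop(timings))
```

Timing each hop separately, rather than only the full request, is what lets you point at one server instead of guessing.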

Optimize database queries & expect strain with scale

Applications can run effectively on small, minimally provisioned databases during the testing or quality assurance phase. In production, however, the product has to work with large datasets, and the database keeps growing at an increasing rate as the number of clients grows. For instance, when middleware servers make multiple requests to the database, heavy queries that require exhaustive processing slow the application down.

As with servers, a monitoring system on the database layer will help you swiftly track down the cause of slow processing and might even suggest ways to avoid the delays altogether. Sometimes, just writing a more optimized SQL query can save several seconds and speed up the application many times over.

Monitor your networks

Network providers can slow down applications through generally slow service. But sometimes the network services themselves are not to blame. Instead, the services on which the network depends may be what ultimately sets off unexpected delays.

As you can imagine, such causes are usually complicated to identify, since they may be nested several layers deep, and they are even harder to resolve. Monitoring the network, as with servers and databases, can ease the pain by speeding up identification and arming you with the information to design or choose a better network solution.
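One way to see whether a delay comes from the network itself or from something it depends on is to time the phases of a connection separately. The sketch below is a minimal example under that assumption: it measures name resolution and TCP connect time as two numbers, using only the standard `socket` module. The function name and the returned keys are made up for illustration.

```python
import socket
import time


def measure_connect(host: str, port: int, timeout: float = 3.0) -> dict:
    """Time name resolution and TCP connect separately, in milliseconds."""
    t0 = time.perf_counter()
    # Resolve the host name; take the first address the resolver returns.
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    )[0]
    t1 = time.perf_counter()

    # Open and immediately close a TCP connection to that address.
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        sock.connect(addr)
    t2 = time.perf_counter()

    return {
        "resolve_ms": (t1 - t0) * 1000.0,
        "connect_ms": (t2 - t1) * 1000.0,
    }


# Example call (the host is a placeholder, replace with your own service):
# print(measure_connect("example.com", 443))
```

If `resolve_ms` dominates, the name lookup is the likelier culprit; if `connect_ms` dominates, look at the route to the server.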

Leverage third-party libraries thoughtfully

Open source is a wonderful culture that allows already developed code to be integrated into your own development process. However, this same advantage can lead to a significant problem. Developers often let important parts of their applications rely entirely on third-party tools, libraries, or SDKs. There is a tremendous benefit to this: it saves the team from writing complex code from scratch by employing a battle-tested, pre-written solution. But by embracing third-party libraries, you’re also relinquishing control of your application, and it may be that the libraries or SDKs are not optimized. Using a library that isn’t optimized for performance generally means that your application won’t be optimized for performance either. And diagnosing issues related to someone else’s code is usually harder than figuring out what’s wrong with your own.

Therefore, it is always advisable to go through the code of any third-party library or SDK to check for missed optimizations and repetitive bits that might slow down the product. Code that unnecessarily burdens the application should be removed for faster execution. Maintaining a log file that records the application’s vitals can be very useful for tracking down such points in third-party code.
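A simple way to record such vitals is to wrap the calls you make into third-party code and log how long each one takes. The sketch below uses Python's standard `logging` module. The decorator name, the logger name, the log file name, and the 100 ms warning threshold are all assumptions chosen for the example, and `parse_with_vendor_sdk` is a stand-in for a real library call.

```python
import functools
import logging
import time

logger = logging.getLogger("vendor_timing")


def log_duration(label: str, warn_after_ms: float = 100.0):
    """Decorator that logs how long the wrapped call took.

    Calls slower than warn_after_ms are logged at WARNING level so they
    stand out in the log file.
    """

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            finally:
                elapsed_ms = (time.perf_counter() - start) * 1000.0
                level = (
                    logging.WARNING
                    if elapsed_ms > warn_after_ms
                    else logging.INFO
                )
                logger.log(level, "%s took %.1f ms", label, elapsed_ms)

        return wrapper

    return decorator


if __name__ == "__main__":
    # Hypothetical log file name.
    logging.basicConfig(filename="app_vitals.log", level=logging.INFO)

    @log_duration("vendor_sdk.parse")
    def parse_with_vendor_sdk(payload: str) -> str:
        # Stand-in for a call into a third-party library.
        time.sleep(0.15)
        return payload.upper()

    parse_with_vendor_sdk("hello")
```

Because the timing lives in a wrapper, you can measure a library without modifying its source.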

Optimize for new version adoption

Old software versions used by the customer may not be compatible with newer frameworks and compilers, and may not be able to run code designed to enhance the application. Thus, despite ample measures taken on the service provider’s side, the application might slow down because of outdated software or tools on the user’s end.

Real User Monitoring, which provides data about the user, can supply the details needed to identify whether slow performance originates on the user’s side. You can then give the customer the necessary recommendations to ensure a premium experience. I strongly recommend making version updates and upgrades very easy for your users.
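As a small illustration of how user-side data can point to outdated versions, the sketch below groups load-time samples by client version and flags the versions whose median load time is above a threshold. The sample format, the version strings, and the threshold are assumptions made for this example and do not reflect the export format of any particular Real User Monitoring tool.

```python
from collections import defaultdict
from statistics import median


def median_load_by_version(samples):
    """Group (client_version, load_time_ms) pairs and take the median per version."""
    by_version = defaultdict(list)
    for version, load_ms in samples:
        by_version[version].append(load_ms)
    return {version: median(times) for version, times in by_version.items()}


def slow_versions(samples, threshold_ms):
    """Return the versions whose median load time exceeds threshold_ms."""
    medians = median_load_by_version(samples)
    return sorted(v for v, m in medians.items() if m > threshold_ms)


if __name__ == "__main__":
    # Hypothetical samples: (client version, page load time in ms).
    samples = [
        ("1.0", 3000),
        ("1.0", 3400),
        ("2.0", 900),
        ("2.0", 1100),
        ("2.0", 1000),
    ]
    print(median_load_by_version(samples))
    print(slow_versions(samples, threshold_ms=2000))
```

The median is used here instead of the mean so that a single unusually slow session does not make a whole version look bad.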
